II. Teaching with Writing in an Age of AI

What are some of the risks to students of relying exclusively on AI?

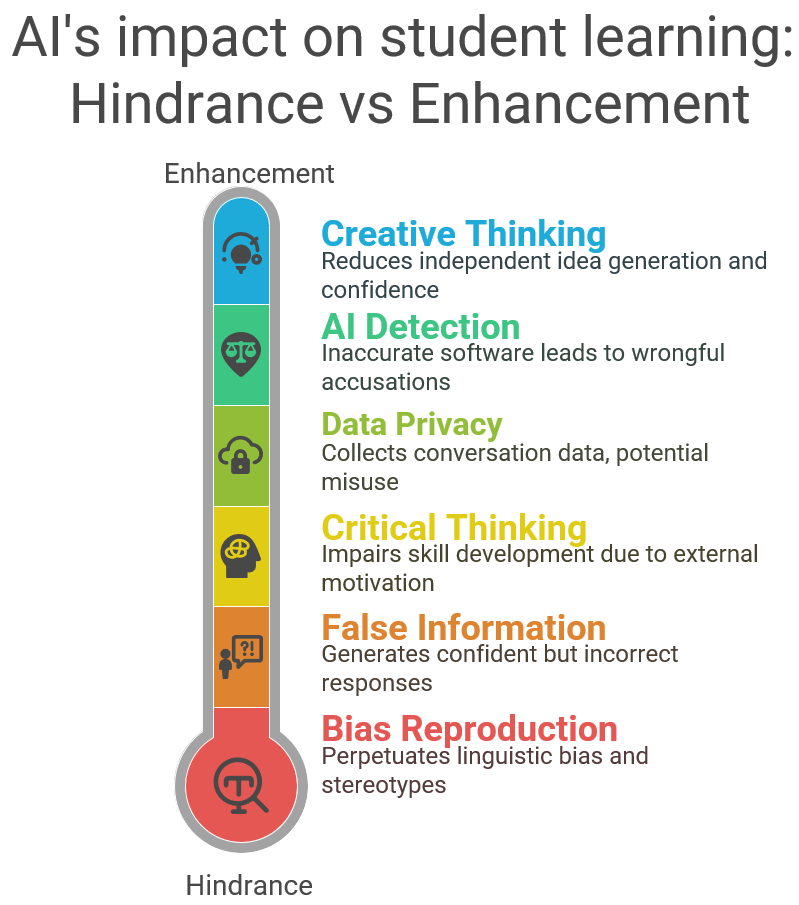

Visualizing the risks of AI in education: From enhanced support to potential harm

The infographic presents a spectrum of AI’s impact on student learning, illustrated as a thermometer ranging from “Enhancement” to “Hindrance.” It highlights six key areas where AI may negatively influence learning: reduced creative thinking, inaccurate AI detection tools, data privacy concerns, diminished critical thinking, the spread of false information, and the reinforcement of bias. The graphic serves as a visual aid for understanding where thoughtful integration of AI in education is needed.

AI Reliance Risks

Students know that using AI to complete assignments carries risks comparable to those of plagiarism or other academic misconduct. They may be less aware, however, of other risk factors:

| Bias Reproduction | Reproduction of online content biases: Because LLMs generate their text responses from language patterns learned from existing text, much of it scraped from the internet, it is inevitable that biases will be reproduced through their algorithms. This is an important risk for students and instructors to consider in terms of form and content. In terms of form, linguistic bias is perpetuated because AI relies on language models already steeped in what composition scholar Asao B. Inoue terms “White Language Supremacy.” When words like “essay,” “report,” or “academic” are used to prompt a response from the bot, it will draw on the dominant discourse language patterns that have traditionally been associated with such terms, potentially further marking historically marginalized varieties of English as not academic. In terms of content, stereotypes and falsehoods can be reproduced through the algorithmic categorization and combination of words and phrases, and of the people, ideas, and events those words refer to. |

| Access Inequality | Unequal Access to AI Tools: While some students may be able to afford the latest versions of AI or the newest AI apps or plugins, others may not have the same access. Some students may feel uncomfortable sharing personal information with a chatbot. |

| False Information | Creation of false information: Chatbots are known to produce “hallucinations,” confident responses that are, in fact, false. Students may turn in work that includes false or fake data and must be made aware of the fallibility of AI chatbots. |

| Average Response | Generates Common, not insightful, Responses: Responses generated by AI represent the most common or the average response to a particular topic, rather than the most insightful or most in-depth. |

| Skill Development | Hinders Critical Thinking and Writing: Education research has suggested that when students’ drive to complete assignments is externally motivated (for instance, by points or grades), their ability to remember, synthesize, and evaluate information suffers. Students should be made aware that relying exclusively on AI chatbots to produce writing for them may hinder their learning, potentially making tasks in more advanced classes or in their desired profession more difficult. This is not to suggest that use of AI is always inconsistent with critical thinking. Students may engage with AI in ways that help deepen or advance their thinking. Indeed, prompt engineering (or creating prompts and questions to elicit desired responses from a generative AI model) is a complex task that requires thought and persistence. However, if students rely solely on a chatbot to write a paper, they may be missing out on important skills. |

| Data Privacy | Collection of Personal Data: ChatGPT and other public AI tools collect data about the conversations they have with users. This data may include personal information, such as names and locations, as well as sensitive information, such as opinions and beliefs. This data could potentially be accessed by AI developers or by other parties. There is also a risk that the data collected by AI tools could be used for purposes other than those for which it was intended. For example, it could be used for targeted advertising or to influence individuals in ways that they may not be aware of. UW-Madison has an enterprise license with Microsoft Copilot, so information students share via Copilot stays within a UW-Madison cloud. |

| Detection Fallibility | Inaccurate AI detection software: Students are at risk of being wrongfully accused of plagiarism or cheating because of the inaccuracy of AI detection tools. Recent research has shown that multilingual writers are wrongfully accused more often than their monolingual English-speaking peers. |

| Creative Thinking | Risks to creative thinking and confidence: While some students may find generative AI useful as a brainstorming “partner,” one study demonstrated that students found it more difficult to develop ideas on their own after using generative AI to brainstorm. In addition, some students felt less confident in their own abilities as writers. |

| Wellbeing | Potential risks to students’ sense of wellbeing and belonging. |